Hugging Face is a popular platform that hosts thousands of ready-to-use, state-of-the-art AI models. But have you ever considered running these models on your own site, or in a browser extension?

Thanks to model quantization and the rising popularity of smaller models, running AI on a desktop computer or, indeed, in the browser is increasingly possible. This opens the door to new interface experiences on the web, ranging from text creation/rewriting tools to AI-powered games in the web browser.

The web platform is starting to explore ways to run AI in the browser, from the Chrome Prompt API to Transformers.js (based on the in-development WebGPU APIs), and a new option is now available: AiBrow. It is a web extension that brings native GPU and CPU LLM performance, with a multi-browser compatible Prompt API.

Use Hugging Face models with AiBrow

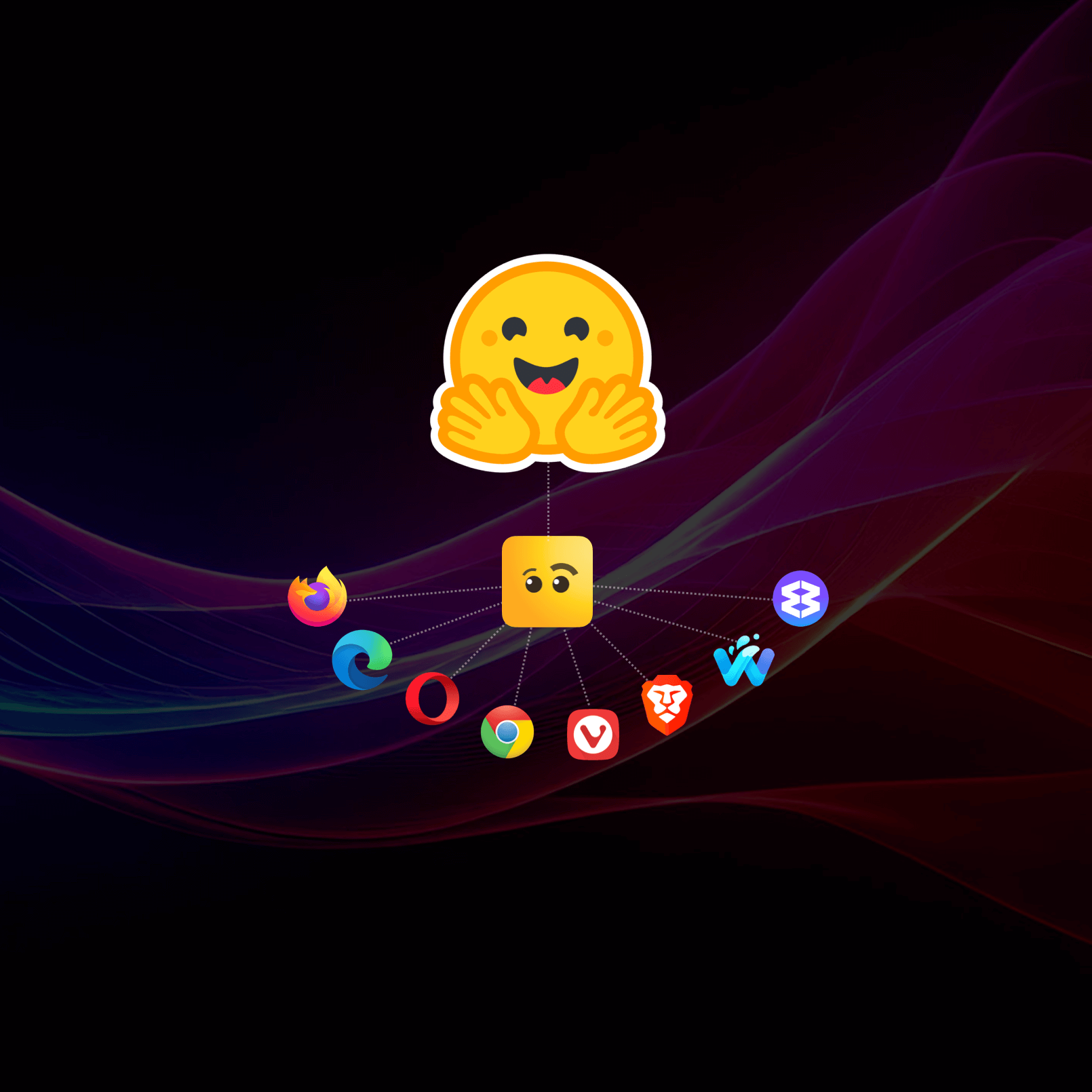

AiBrow is a browser extension that brings its own AI models, and those from Hugging Face, to any webpage using the popular llama.cpp bindings under the hood. This allows you to reliably add AI to your site and supports a broad range of browsers, including Chrome, Firefox, Edge, Brave, Wavebox, Vivaldi, and more.

The best place to get started is with the AiBrow docs, or by using the sample code below to first check if the extension is installed, help the user install it, and then run your first prompt.

```js
// Wrapped in an async function so that `await` and the early `return`s are valid
async function runFirstPrompt () {
  if (!window.aibrow) {
    // The AiBrow extension isn't installed. Use the easy install URL which,
    // after the install, redirects back to your page.
    //
    // You may want to prompt the user before doing this
    window.location.href = `https://aibrow.ai/install?redirect_to=${encodeURIComponent(window.location.href)}`
    return
  }

  const { helper } = await window.aibrow.capabilities()
  if (!helper) {
    // The AiBrow extension also needs a native helper installed. After the extension
    // is installed, users are normally prompted to install it. If, however, they haven't
    // and return to your page, you can redirect them back to the install page. AiBrow
    // will provide the right instructions for what they need to do to complete the install
    window.location.href = `https://aibrow.ai/install?redirect_to=${encodeURIComponent(window.location.href)}`
    return
  }

  // AiBrow is all set up, let's create a session and start prompting. Models from Hugging
  // Face need to be in the GGUF format. Use the model's download link here
  const session = await window.aibrow.languageModel.create({
    model: 'https://huggingface.co/bartowski/Qwen2.5-7B-Instruct-GGUF/resolve/main/Qwen2.5-7B-Instruct-Q4_K_M.gguf'
  })

  // You can now prompt the session and get a stream of text back
  const stream = await session.promptStreaming('Why is the sky blue?')
  for await (const chunk of stream) {
    console.log(chunk)
  }
}

runFirstPrompt()
```

This snippet will take the user from installation all the way through to prompt and output! Remember, AiBrow pre-ships with SmolLM 1.7B, so you don't need to download your own model to get going; a download is only needed if you use another specific Hugging Face model. Once the user has installed AiBrow and your model has been downloaded for the first time, the model is available to all sites, which speeds up setup the next time.
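As the comments in the sample note, a Hugging Face model needs to be a GGUF file referenced by its download link. On Hugging Face, a file's web page lives under a `/blob/` path, while the direct download lives under `/resolve/`, so a small helper can normalise either form before it is passed as `model`. This is a minimal sketch; `toGgufDownloadUrl` is a hypothetical helper name and is not part of AiBrow.

```javascript
// Hypothetical helper (not part of AiBrow): turn a Hugging Face file URL into
// a direct download URL and check that it points at a GGUF file.
function toGgufDownloadUrl (fileUrl) {
  const url = new URL(fileUrl)
  if (url.hostname !== 'huggingface.co') {
    throw new Error(`Not a Hugging Face URL: ${fileUrl}`)
  }
  if (!url.pathname.toLowerCase().endsWith('.gguf')) {
    throw new Error(`Not a GGUF file: ${fileUrl}`)
  }
  // File pages use /<owner>/<repo>/blob/<revision>/..., while downloads use
  // /<owner>/<repo>/resolve/<revision>/...
  url.pathname = url.pathname.replace(/^(\/[^/]+\/[^/]+)\/blob\//, '$1/resolve/')
  return url.toString()
}

console.log(toGgufDownloadUrl(
  'https://huggingface.co/bartowski/Qwen2.5-7B-Instruct-GGUF/blob/main/Qwen2.5-7B-Instruct-Q4_K_M.gguf'
))
```

A URL that already uses `/resolve/` is returned unchanged, so the helper is safe to apply to any model link before creating a session.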

What can Hugging Face models do in the browser?

When you use AiBrow with a Hugging Face model, you immediately have access to the languageModel, embedding and coreModel APIs.

Language Model API

The language model API allows you to converse with the language model in an assistant/user pattern. It supports system prompts and initial conversations, as well as grammars for structured output, and can be used in situations like the email subject generator demo.
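As a rough sketch of that pattern, the function below creates a session with a system prompt and forwards each streamed chunk to a callback. Only `languageModel.create`, the `model` option and `promptStreaming` appear in the sample earlier in this post; the `systemPrompt` option name is an assumption modelled on the Chrome Prompt API, and `suggestSubject` is a hypothetical helper, so check the AiBrow docs for the exact option names before relying on it.

```javascript
// Hypothetical helper: ask the model for an email subject line.
// `aibrow` is the object the extension exposes as window.aibrow.
async function suggestSubject (aibrow, emailBody, onChunk, model) {
  const options = {
    // ASSUMPTION: option name borrowed from the Chrome Prompt API
    systemPrompt: 'You write short, clear email subject lines.'
  }
  // Optional GGUF download URL; when omitted, no model is requested explicitly
  if (model) options.model = model

  const session = await aibrow.languageModel.create(options)
  const stream = await session.promptStreaming(
    `Suggest a subject line for this email:\n\n${emailBody}`
  )
  for await (const chunk of stream) {
    onChunk(chunk)
  }
}

// In a page where the extension is installed:
// suggestSubject(window.aibrow, 'Hi team, the launch moved to Friday.', (chunk) => console.log(chunk))
```

Passing the `aibrow` object in as a parameter, rather than reading `window.aibrow` inside the function, keeps the helper easy to exercise with a stub.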

Embedding API

The embedding API provides a method for turning any piece of text into an embedding. The embeddings can then be stored and searched over to find similar text to a new input. We recommend using a purpose-trained embedding model for this, as these normally provide better results.
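To illustrate what searching over embeddings means, similarity between two embedding vectors is commonly measured with cosine similarity. The sketch below ranks stored vectors against a query vector in plain JavaScript. It illustrates the general technique only and does not describe how AiBrow implements its own search; `cosineSimilarity` and `rankBySimilarity` are hypothetical names, and the vectors are toy values.

```javascript
// Cosine similarity: 1 means same direction, 0 means unrelated (orthogonal)
function cosineSimilarity (a, b) {
  if (a.length !== b.length) throw new Error('Vectors must have the same length')
  let dot = 0
  let normA = 0
  let normB = 0
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i]
    normA += a[i] * a[i]
    normB += b[i] * b[i]
  }
  if (normA === 0 || normB === 0) return 0
  return dot / (Math.sqrt(normA) * Math.sqrt(normB))
}

// Score every stored embedding against the query and sort best-first
function rankBySimilarity (embeddings, queryVector) {
  return embeddings
    .map(({ id, vector }) => ({ id, score: cosineSimilarity(vector, queryVector) }))
    .sort((x, y) => y.score - x.score)
}

// Toy 3-dimensional vectors; real embedding vectors have hundreds of dimensions
const stored = [
  { id: 'a', vector: [1, 0, 0] },
  { id: 'b', vector: [0, 1, 0] },
  { id: 'c', vector: [0.9, 0.1, 0] }
]
const ranked = rankBySimilarity(stored, [1, 0, 0])
console.log(ranked.map((r) => r.id)) // [ 'a', 'c', 'b' ]
```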

```js
// Create the session. Use the model's download link (/resolve/), not its file page (/blob/)
const session = await window.aibrow.embedding.create({
  model: 'https://huggingface.co/second-state/All-MiniLM-L6-v2-Embedding-GGUF/resolve/main/all-MiniLM-L6-v2-Q8_0.gguf'
})

// Generate embeddings for known data
const data = {
  '1': 'data1',
  '2': 'data2'
  // ...
}
const dataIds = Object.keys(data)
const vectors = await session.get(dataIds.map((id) => data[id]))
const embeddings = dataIds.map((id, index) => ({ id, vector: vectors[index] }))

// Sort the list of embeddings by the most similar
const search = await session.get('search data')
const results = session.findSimilar(embeddings, search)
console.log(data[results[0].id])
```

Core Model API

The coreModel API is a lower-level API that exposes the underlying model and passes your prompts and options directly through to it. This means you need to take care of things like prompt templating yourself, but it gives you more power to constrain the language model in new ways.
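As an example of the prompt templating you would take on, many instruct models, including the Qwen2.5 family used earlier, are trained on the ChatML format. The sketch below builds such a prompt string by hand. `buildChatMLPrompt` is a hypothetical helper, and how the resulting string is handed to the coreModel API is not shown here, so refer to the AiBrow docs for that call.

```javascript
// Hypothetical helper: format chat messages as a ChatML prompt string.
// Each turn is wrapped in <|im_start|>role ... <|im_end|>, and the prompt ends
// with an open assistant turn for the model to complete.
function buildChatMLPrompt (messages) {
  const turns = messages.map(
    ({ role, content }) => `<|im_start|>${role}\n${content}<|im_end|>\n`
  )
  return turns.join('') + '<|im_start|>assistant\n'
}

const chatPrompt = buildChatMLPrompt([
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'Why is the sky blue?' }
])
console.log(chatPrompt)
```

Other model families use different templates, so the right format should be taken from the model card of whichever GGUF model you load.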

Why use the AiBrow extension?

The AiBrow extension provides an easy-to-use, out-of-the-box API, along with some lower-level APIs for more complex use cases. As it uses a native helper rather than WebGPU, it has near-metal performance, better memory utilization, and broader platform support. AiBrow supports an extensive range of models, with model-sharing across sites reducing the burden of user downloads, and is open-source on GitHub.