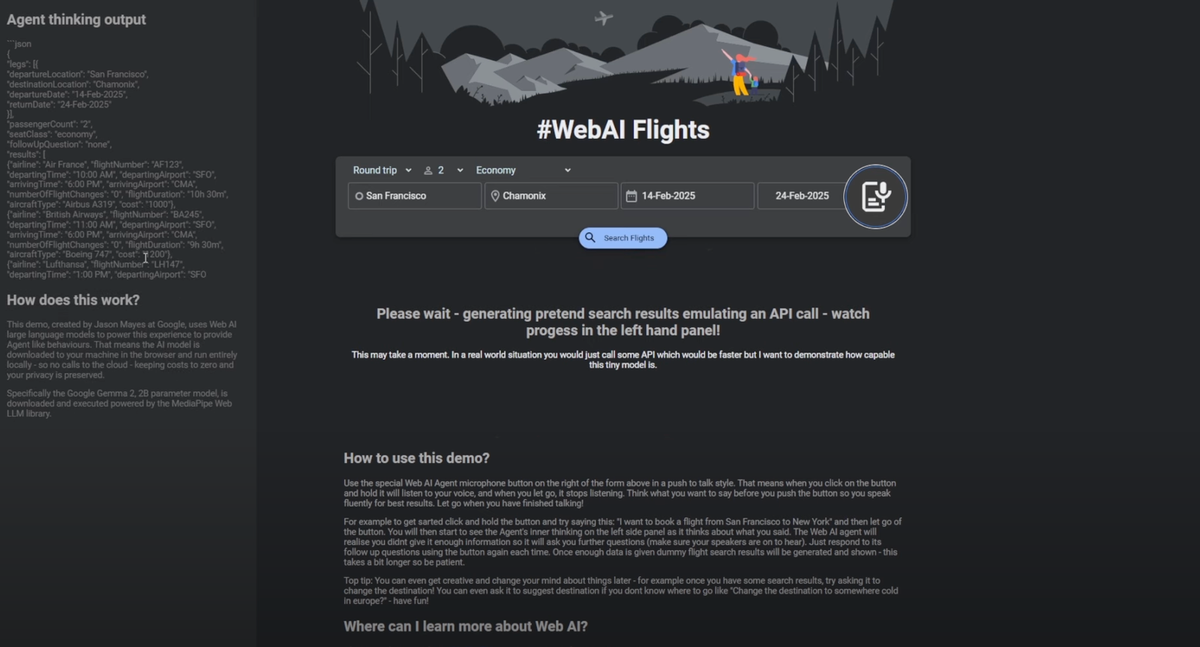

Jason Mayes posted an exciting demo this week, showing an idea that he'd had about using AI Agents to book flights. What made the demo particularly interesting is that it used a Gemma 2, 2B parameter model running locally in the browser.

In the demo, you can talk to the virtual booking agent using the web speech APIs to set out what type of trip you want. Your spoken input is transcribed and passed to the LLM, which either asks follow-up questions or generates some dummy search results (as if it were the Google Flights API). Try it out on CodePen!

I thought this was an interesting intersection of some existing web APIs and AI, giving a sneak peek at what Web 4.0 could look like, where agents do the repetitive work of form filling and querying for you. After looking through Jason's code, one thing that really stood out was the size of the prompts. The prompt that's used by the booking agent comes in at approx. 1,000 tokens (or 566 words). It covers everything from how the LLM should behave and the rules it should abide by, to example inputs and outputs and what the output JSON should look like.

This prompt probably took some tweaking to get it to behave as expected and I had a suspicion that given a well-crafted user input, it could go off-script and generate something other than a good output. I thought there must be an easier way rather than pleading with the prompt to get what you want!

Using Grammar

If you use grammar with language models, you can programmatically define and constrain what the output should be, and easily ensure that the LLM always returns something in the expected shape: in our case, JSON in a specific structure.
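To get a feel for how this works, here is a toy sketch of constrained decoding for a single enum field. At each step, any candidate token that would take the output outside the allowed values is discarded and the best remaining one is kept. The candidate tokens and scores are made up for illustration, and `pickConstrainedToken` is a hypothetical helper rather than part of any library. Real runtimes apply the same idea to the model's token probabilities against a full grammar.

```javascript
// Toy illustration of grammar-constrained decoding for one enum field.
// The allowed values match the seatClass enum used later in this post.
const SEAT_CLASSES = ['economy', 'premium economy', 'business', 'first'];

// True if `text` is still a prefix of at least one allowed value.
function isValidPrefix(text, allowed) {
  return allowed.some((value) => value.startsWith(text));
}

// Picks the highest-scoring candidate token that keeps the output valid.
// Returns null when no candidate can continue an allowed value.
function pickConstrainedToken(soFar, candidates, allowed) {
  const valid = candidates
    .filter(({ token }) => isValidPrefix(soFar + token, allowed))
    .sort((a, b) => b.score - a.score);
  return valid.length > 0 ? valid[0].token : null;
}

// Hypothetical scores: unconstrained, the model would prefer "cheap".
const candidates = [
  { token: 'cheap', score: 0.6 },
  { token: 'eco', score: 0.3 },
  { token: 'bus', score: 0.1 },
];

const chosen = pickConstrainedToken('', candidates, SEAT_CLASSES);
console.log(chosen); // 'eco'
```

The model's favourite token is thrown away because no allowed value starts with it, so the output can only ever end up as one of the four seat classes.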

I used the open-source AiBrow extension so that I could still run Gemma 2, 2B locally on the device, but also so I could define a JSON output schema for the LLM. This worked fantastically well and it meant I could be lazy about writing my new much shorter prompt (approx. 150 tokens), getting good outputs from the LLM on the first iteration...

You are a travel bot API who needs to book flights for people and your task is to fill the JSON object from the users input so we can search for a flight.

The user will give you some free text and you should do your best to update the JSON object. The current JSON object is:

${JSON.stringify(formToObj())}

If you need more information or any fields are blank, use the \`followUpQuestion\` field to ask the user for more information & ask for more than one thing at a time, be friendly, but don't use emojis. If you have all the information you need, leave the \`followUpQuestion\` field blank.

And here is the grammar that was used to specify the output:

    {
      type: 'object',
      properties: {
        passengerCount: { type: 'integer' },
        seatClass: {
          type: 'string',
          enum: ['economy', 'premium economy', 'business', 'first']
        },
        legs: {
          type: 'array',
          minItems: 1,
          maxItems: 2,
          items: {
            type: 'object',
            properties: {
              departureLocation: { type: 'string' },
              destinationLocation: { type: 'string' },
              departureDate: { type: 'string' },
              returnDate: { type: 'string' }
            },
            required: ['departureLocation', 'destinationLocation', 'departureDate', 'returnDate'],
            additionalProperties: false
          }
        },
        followUpQuestion: { type: 'string' }
      },
      required: ['passengerCount', 'seatClass', 'legs'],
      additionalProperties: false
    }

The output grammar inadvertently contains additional context for the LLM: once it starts to generate the fields, it becomes statistically more likely to fill them in from the input data. This means, for example, that I don't need to tell the LLM that there can be multiple legs and that each one should have a departure and destination; it just becomes statistically likely to do this as it computes the output.
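As a rough sketch of how the page might consume an output shaped by this schema, the snippet below parses the model's text, runs a few defensive checks that mirror the schema, and then branches on `followUpQuestion` as the prompt describes. `isValidBooking` and `nextAction` are hypothetical helpers, and the sample output is written by hand for illustration rather than taken from a real model run. The checks cover only part of the schema (they do not enforce `additionalProperties: false`, for example), so treat them as a belt-and-braces guard, not a full JSON Schema validator.

```javascript
const SEAT_CLASSES = ['economy', 'premium economy', 'business', 'first'];
const LEG_FIELDS = ['departureLocation', 'destinationLocation', 'departureDate', 'returnDate'];

// Partial check mirroring the schema: types, enum, leg count, leg fields.
function isValidBooking(booking) {
  if (typeof booking !== 'object' || booking === null) return false;
  if (!Number.isInteger(booking.passengerCount)) return false;
  if (!SEAT_CLASSES.includes(booking.seatClass)) return false;
  if (!Array.isArray(booking.legs)) return false;
  if (booking.legs.length < 1 || booking.legs.length > 2) return false;
  if ('followUpQuestion' in booking && typeof booking.followUpQuestion !== 'string') {
    return false;
  }
  return booking.legs.every(
    (leg) =>
      typeof leg === 'object' &&
      leg !== null &&
      LEG_FIELDS.every((field) => typeof leg[field] === 'string')
  );
}

// Decides what the UI should do next with the raw text from the model.
function nextAction(rawModelOutput) {
  let booking;
  try {
    booking = JSON.parse(rawModelOutput);
  } catch (err) {
    return { type: 'error', reason: 'not valid JSON' };
  }
  if (!isValidBooking(booking)) {
    return { type: 'error', reason: 'does not match schema' };
  }
  // A blank or missing followUpQuestion means the form is complete.
  const question = (booking.followUpQuestion || '').trim();
  return question ? { type: 'ask', question, booking } : { type: 'search', booking };
}

// Hand-written sample output (hypothetical, for illustration only).
const sampleOutput = JSON.stringify({
  passengerCount: 2,
  seatClass: 'business',
  legs: [
    { departureLocation: 'London', destinationLocation: 'New York', departureDate: '', returnDate: '' },
  ],
  followUpQuestion: 'What dates would you like to travel?',
});

const action = nextAction(sampleOutput);
console.log(action.type); // 'ask'
```

With the grammar in place, the error branches should rarely fire; they are there so that a bad response fails loudly instead of filling the form with junk.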

As Web 4.0 develops during 2025, I'm sure we'll see structured outputs become a key part of ensuring agents can efficiently consume and direct LLM execution towards their agentic goals.

You can give my grammar demo a try on CodePen too (it's a bit hacked around, but it's an interesting proof of concept!). Make sure you download the AiBrow extension first!